The basic implication of the CAPM is that the expected excess return of an asset is linearly related to the expected excess return on the market portfolio according to the following relation:

$$E[R_i] - R_f = \beta_i \left(E[R_m] - R_f\right)$$

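This is simply a specific instance of a generic factor pricing model in which the factor is the excess return of a surrogate market portfolio and the test assets are all excess returns of risky assets. Writing $R^e_{i,t} = R_{i,t} - R_{f,t}$ for the excess return of asset $i$ and $R^e_{m,t}$ for that of the market proxy, the betas are defined as the slope coefficients of the time-series regressions

$$R^e_{i,t} = \alpha_i + \beta_i R^e_{m,t} + \varepsilon_{i,t}, \qquad t = 1, \dots, T$$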

and the model states that expected returns are linear in the betas:

$$E[R^e_i] = \beta_i \lambda, \qquad \lambda = E[R^e_m]$$

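Taking expectations of the time-series regression and comparing the result with the pricing relation shows that the regression intercepts (alphas) are the pricing errors. From the expressions above, there are therefore two testable implications with regard to the validity of the CAPM: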

[1] All regression intercepts should be individually equal to zero

[2] All regression intercepts should be jointly equal to zero

While there are numerous ways to estimate the model and evaluate the properties of its parameters, this post simply applies the Gibbons, Ross & Shanken (GRS) methodology, in both its numerical and graphical incarnations, to a subset of the data. An attempt was made to download price and return data for the constituents of the S&P 500 since 1995. Data availability issues, however, constrained the number of assets under examination to 351 in total, with 216 monthly observations for each asset (as well as for the index and the T-Bill rate). The previous post summarised key return and risk statistics associated with each of these 351 assets with the help of the rpanel package (for control) and the PerformanceAnalytics package (for a host of measures). To implement the GRS test, the number of test assets must be smaller than the number of return observations; with 351 assets and only 216 observations, the joint test further down is therefore run on a subset of 200 assets.
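The reason for this restriction can be illustrated with a small sketch on simulated data. The dimensions and names (`n_obs`, `n_assets`, `sim_ret`) are made up for the illustration and are not the post's data; the point is only that a sample covariance matrix built from fewer observations than assets is rank deficient, and that the second degrees-of-freedom parameter of the F distribution used by the test, T - N - 1, must be positive.

```r
# Hypothetical dimensions: fewer observations than assets.
set.seed(1)
n_obs    <- 20
n_assets <- 30
sim_ret  <- matrix(rnorm(n_obs * n_assets), nrow = n_obs)

# The sample covariance matrix has rank at most n_obs - 1,
# so it cannot be inverted when n_assets >= n_obs.
cov_rank <- qr(cov(sim_ret))$rank

# Second degrees-of-freedom parameter of the single-factor GRS F statistic.
df2_sim  <- n_obs - n_assets - 1   # negative here: the test is undefined
df2_post <- 216 - 200 - 1          # the 200-asset subset used in this post

cat("rank:", cov_rank, "of", n_assets, "| df2 (simulated):", df2_sim,
    "| df2 (200 assets, 216 months):", df2_post, "\n")
```

With 200 assets and 216 months the test is feasible, but only 15 degrees of freedom remain in the denominator.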

For the sake of convenience the (updated) dashboards from the previous blog post are given below.

After estimating a conventional time-series regression for each risky asset, a dashboard of residual diagnostic plots can also be helpful.


```r
# Residual diagnostics dashboard.
# Assumes ts.list (per-asset regression output), monthly.ret, ex.ret and exm.ret
# already exist. TableMaker() is not defined in this post and is assumed to be loaded.
library(rpanel)                 # rp.control, rp.slider
library(car)                    # qqPlot, durbinWatsonTest
library(lmtest)                 # gqtest, bptest
library(tseries)                # jarque.bera.test
library(PerformanceAnalytics)   # chart.Histogram

windows()   # Windows-only graphics device; dev.new() is the portable equivalent
layout(matrix(c(1,1,2,3,1,1,4,5,6,6,7,7),byrow=TRUE,nrow=3,ncol=4))

if (interactive()) {

  draw <- function(panel) {
    i   <- panel$asset
    fit <- ts.list$fit[[i]]
    res <- ts.list$resid[[i]]

    # [1] Asset vs market excess returns with the fitted regression line
    par(mai=c(0,0.3,0.3,0.2))
    plot(x=exm.ret,y=ex.ret[,i],xlab='',ylab='',
         main=paste('Time Series Regression :',colnames(monthly.ret)[i],'\n','Alpha= ',round(ts.list$alphas[i],3),'|| Beta= ',round(ts.list$betas[i],3)),
         cex.main=0.85,cex.lab=0.7,cex.axis=0.8,lwd=1,cex=0.75)
    # box.col=NA hides the legend frame (legend() has no 'border.col' argument)
    legend('topleft',legend=c('Actual','Fitted'),fill=c('black','red'),box.col=NA,bg=NA,cex=0.7,ncol=2)
    abline(fit,col='red',lwd=2)

    # [2] Normality: QQ plot of the residuals
    par(mai=c(0,0.15,0.3,0.2))
    qqPlot(fit,xlab='',ylab='',cex.lab=0.7,cex.axis=0.8,lwd=1,cex=0.75)

    # [3] Residual autocorrelation (stray empty argument removed)
    par(mai=c(0,0.15,0.3,0.2))
    acf(res,main='',xlab='',ylab='',cex.lab=0.7,cex.axis=0.8,lwd=1,cex=0.75)

    # [2] Normality again: histogram with density and normal overlays
    par(mai=c(0,0.15,0.3,0.2))
    chart.Histogram(res[,1,drop=TRUE],border='black',methods=c('add.density','add.normal'),xlab='',ylab='',cex.main=0.85,cex.lab=0.7,cex.axis=0.8,lwd=1,cex=0.75)

    # [4] Heteroskedasticity: residuals against fitted values
    par(mai=c(0,0.15,0.3,0.2))
    plot(x=ts.list$fitted[[i]],y=res,xlab='',ylab='',cex.lab=0.7,cex.axis=0.8,lwd=1,cex=0.75)

    # [5] Stationarity: residuals through time
    par(mai=c(0.3,0.3,0.3,0.2))
    plot(res,type='l',xlab='',ylab='',cex.lab=0.7,cex.axis=0.8,lwd=1,cex=0.75)
    legend('topright',legend=c('Residuals'),fill=c('black'),box.col=NA,bg=NA,cex=0.7,ncol=1)

    # [6] p-values, extracted by name rather than by list position
    gq.p <- as.numeric(gqtest(fit)$p.value)
    bp   <- as.numeric(bptest(fit)$p.value)
    sw   <- as.numeric(shapiro.test(res)$p.value)
    jb   <- as.numeric(jarque.bera.test(res)$p.value)
    dw   <- as.numeric(durbinWatsonTest(fit)$p)

    an  <- c('Alpha','Beta','G-Quandt','')
    an1 <- c('B-Pagan','S-Wilk','J-Bera','D-Watson')
    te  <- cbind(an,rbind(round(ts.list$alphas.p[i],3),round(ts.list$betas.p[i],3),round(gq.p,3),''))
    te1 <- cbind(an1,rbind(round(bp,3),round(sw,3),round(jb,3),round(dw,3)))
    tab <- cbind(te,te1)

    par(mai=c(0.2,0,0.3,0.1))
    TableMaker(row.h=c(1,3),apply(tab,2,rev),c('Measures','P-Values','Measures','P-Values'),strip=T,strip.col=c('red','green'),col.cut=0.10,alpha=0.6,border.col='lightgrey',text.col='black',header.bcol='darkblue',header.tcol='white',title='')

    panel
  }

  # Slider that selects the asset and redraws the dashboard
  panel <- rp.control(asset=1)
  rp.slider(panel,asset,1,ncol(monthly.ret),action=draw,resolution=1,showvalue=TRUE)
}
```

The residual diagnostics dashboard covers the conventional issues of [1] fitted vs actual data, [2] normality, [3] residual autocorrelation, [4] heteroskedasticity, [5] stationarity and [6] a table of p-values, colour coded to reflect rejection (red) or non-rejection (green) of the null hypothesis associated with the named measure for the selected asset at the 10% significance level. Asset selection is once again done via the rpanel package.

So far we have only concerned ourselves with the first testable implication of the CAPM in the context of a time series regression, namely the estimation of the model and the visualisation of residual diagnostics for each of the 351 test assets. The significance of the parameters of each model is assessed by comparing the test statistics to critical values, or the p-values to a chosen significance level. For those assets whose alpha p-value is less (greater) than 0.1, one would (not) reject the null hypothesis that their pricing error is equal to zero at the 10% level of significance.
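As a minimal sketch of this first test, the snippet below runs one time-series regression per asset on simulated data and flags the alphas that are significant at the 10% level. Everything in it (the simulated returns, `mkt_ex`, `asset_ex`, `true_beta`) is hypothetical and only stands in for the post's `exm.ret` and `ex.ret`; it is not the code that produced `ts.list`.

```r
set.seed(42)
n_obs     <- 216
n_assets  <- 5
mkt_ex    <- rnorm(n_obs, mean = 0.005, sd = 0.04)   # simulated market excess return
true_beta <- seq(0.6, 1.4, length.out = n_assets)

# Simulated asset excess returns with zero true alpha.
asset_ex <- sapply(true_beta, function(b) b * mkt_ex + rnorm(n_obs, sd = 0.05))
colnames(asset_ex) <- paste0("A", seq_len(n_assets))

# One time-series regression per asset: excess return on market excess return.
fits <- lapply(seq_len(n_assets), function(i) lm(asset_ex[, i] ~ mkt_ex))

# Coefficient table rows: 1 = intercept (alpha), 2 = slope (beta).
coefs   <- lapply(fits, function(f) summary(f)$coefficients)
alpha   <- sapply(coefs, function(m) m[1, "Estimate"])
alpha_p <- sapply(coefs, function(m) m[1, "Pr(>|t|)"])
beta    <- sapply(coefs, function(m) m[2, "Estimate"])

# Reject H0: alpha_i = 0 at the 10% level when the p-value is below 0.10.
result <- data.frame(asset = colnames(asset_ex), alpha, beta, alpha_p,
                     reject_10pct = alpha_p < 0.10)
print(result)
```

Because each asset is tested separately, some rejections are to be expected by chance alone when many assets are examined, which is one motivation for a joint test.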

The second testable implication of the CAPM in a time series framework relates to the condition that pricing errors (alphas) are jointly equal to zero across all test assets. Gibbons, Ross and Shanken (GRS for short) provide a useful methodology to test this condition under the assumptions of residual normality, homoscedasticity and independence. The GRS test statistic I tried to replicate takes the following functional form:

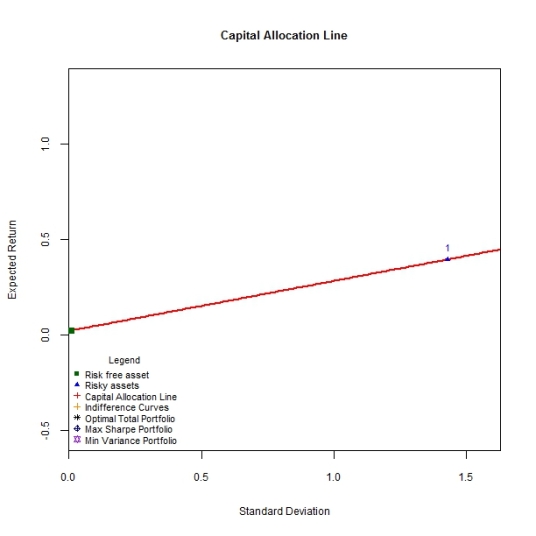

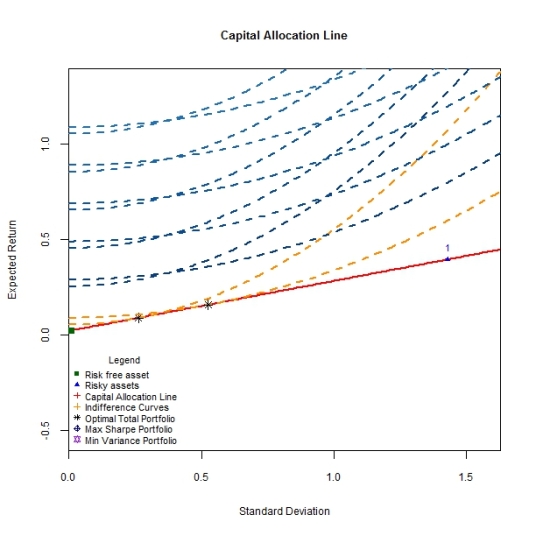
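For readers who cannot view the images, the statistic as computed by the R code in this post can be transcribed as follows. This is a transcription of the code's single-factor form, on the assumption that the images show the same expression; the symbols are introduced here for the purpose.

$$J = \frac{T-N-1}{N}\left[1+\left(\frac{\hat{\mu}_m}{\hat{\sigma}_m}\right)^{2}\right]^{-1}\hat{\alpha}'\,\hat{\Sigma}^{-1}\hat{\alpha} \;\sim\; F_{N,\;T-N-1}$$

Here $T$ is the number of monthly observations, $N$ the number of test assets, $\hat{\alpha}$ the $N \times 1$ vector of estimated intercepts, $\hat{\Sigma}$ the $N \times N$ covariance matrix of the regression residuals, and $\hat{\mu}_m$ and $\hat{\sigma}_m$ the sample mean and standard deviation of the market excess return. Large values of $J$ are evidence against the null hypothesis that all alphas are zero, so the p-value is the upper-tail probability of the F distribution.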

It appears that this statistic can be rewritten in such a way as to provide an intuitive graphical presentation of CAPM validity. More precisely, GRS show that the test statistic can be expressed in terms of how far inside the ex post frontier the factor return (the excess market return in the CAPM) lies.
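The equivalence can be checked numerically. The sketch below uses simulated data and hypothetical names (`mkt_ex`, `asset_ex`), and assumes the usual statement of the result: the quadratic form in the alphas equals the squared ex post Sharpe ratio of the tangency portfolio (market plus test assets) minus the squared Sharpe ratio of the market alone. All moments use the same `cov()`/`sd()` convention so that the two versions agree up to rounding.

```r
set.seed(7)
n_obs    <- 216
n_assets <- 10
mkt_ex   <- rnorm(n_obs, mean = 0.005, sd = 0.04)
asset_ex <- sapply(seq_len(n_assets),
                   function(i) 0.001 + 1.0 * mkt_ex + rnorm(n_obs, sd = 0.05))

# Time-series regressions of all assets on the market in one call.
fit     <- lm(asset_ex ~ mkt_ex)
alpha   <- coef(fit)[1, ]          # first row holds the intercepts
res_cov <- cov(residuals(fit))     # residual covariance matrix

# Quadratic form in the pricing errors.
quad_alpha <- as.numeric(t(alpha) %*% solve(res_cov, alpha))

# Squared ex post Sharpe ratios: market alone, and the tangency portfolio
# of the market plus the test assets.
all_ex  <- cbind(mkt_ex, asset_ex)
mu_all  <- colMeans(all_ex)
sr2_mkt <- (mean(mkt_ex) / sd(mkt_ex))^2
sr2_tan <- as.numeric(t(mu_all) %*% solve(cov(all_ex), mu_all))

# GRS statistic written both ways, with the upper-tail F p-value.
df_scale   <- (n_obs - n_assets - 1) / n_assets
grs_alpha  <- df_scale * quad_alpha / (1 + sr2_mkt)
grs_sharpe <- df_scale * (sr2_tan - sr2_mkt) / (1 + sr2_mkt)
p_value    <- pf(grs_alpha, n_assets, n_obs - n_assets - 1, lower.tail = FALSE)

cat("GRS (alphas):", grs_alpha, "| GRS (Sharpe ratios):", grs_sharpe,
    "| p-value:", p_value, "\n")
```

The larger the gap between the tangency Sharpe ratio and the market Sharpe ratio, the further the market proxy sits inside the ex post frontier and the larger the statistic.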

Disequilibrium in markets implies that prices continue adjusting until the market clears. As prices move, so will asset returns and relative market values, affecting the tangency portfolio weights and the market portfolio weights respectively. In the ideal CAPM universe, market and tangency portfolios will eventually converge and every investor will hold the tangency portfolio. Hence for the CAPM to hold, the market portfolio surrogate used in model estimation must not deviate too far, in a statistical sense, from the tangency portfolio. This code snippet calculates the test statistic and its p-value, and plots the usual frontiers, assets and portfolios.

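```r
# Joint (GRS) test. Only the first 200 assets are used so that n.obs > n.assets.
# Frontiers(), Frontier.Draw() and CAL.Draw() are not defined in this post and are
# assumed to be loaded already, along with bench.ret and rf.vec.
library(MASS)   # ginv

t.per  <- nrow(monthly.ret)   # number of monthly observations (T)
n.ass  <- 200                 # number of test assets (N)
t.term <- (t.per-n.ass-1)/n.ass
alphas <- ts.list$alphas[1:n.ass]

# T x N matrix of regression residuals and its covariance matrix
res.cov   <- do.call(cbind,ts.list$resid[1:n.ass])
res.cov.m <- cov(res.cov)

# Sharpe-ratio adjustment, quadratic form in the alphas, and the statistic
term     <- (1+(mean(exm.ret)/apply(exm.ret,2,sd))^2)^(-1)
a.term   <- t(alphas)%*%ginv(res.cov.m)%*%alphas
t.stat.g <- as.numeric(t.term*term*a.term)

# p-value: upper tail of F(N, T-N-1); the original lower.tail=T returned the CDF.
# With T=216 and N=200 the second degrees of freedom equal 15.
grs.pval <- pf(t.stat.g,n.ass,t.per-n.ass-1,lower.tail=FALSE)

# Means and covariances (in percent) of the benchmark plus the test assets
ret.set <- t(as.matrix(colMeans(cbind(bench.ret,monthly.ret[,1:n.ass]))*100))
cov.set <- var(cbind(bench.ret,monthly.ret[,1:n.ass])*100)

risky.asset.data <- list()
risky.asset.data$mean.ret   <- ret.set
risky.asset.data$cov.matrix <- cov.set
risky.asset.data$risk.free  <- mean(rf.vec)

# Frontiers and the capital allocation line
base <- Frontiers(risky.asset.data)
Frontier.Draw(risky.asset.data,base,rainbow(n.ass),'new',lty=1,paste('Gibbons,Ross & Shanken Interpretation','\n','GRS statistic/pvalue :',round(t.stat.g,3),'/',round(grs.pval,3),'\n','For',n.ass,'assets'))
CAL.Draw(risky.asset.data,base,'black',lty=1)

# Line from the risk-free rate through the market proxy (gold), plus the proxy itself
x   <- seq(0,30,by=0.01)
lin <- mean(rf.vec)+((((mean(bench.ret)*100)-mean(rf.vec))/(apply(bench.ret,2,sd)*100))*x)
lines(x=x,lin,col='gold',lwd=1.5)
points(x=apply(bench.ret,2,sd)*100,y=mean(bench.ret)*100,col='black',cex=1,pch=17)
text('Market\nReturn',x=apply(bench.ret,2,sd)*100,y=mean(bench.ret)*100,col='black',cex=0.7,pos=1)
```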

The CAPM will always hold if the market proxy is mean-variance efficient. For this condition to hold true, the surrogate for the market portfolio should lie on the capital market line, which is the efficient frontier when a risk-free asset is introduced alongside the collection of risky assets. Since the true market portfolio is not identifiable, the CAPM cannot really be tested. The market proxy used above, monthly returns on the S&P 500 index, does not include assets such as [1] real estate and [2] human capital.